Throughout my career I have been through more “Leadership” or “Managerial” training than I can remember, from the lead-by-example style I learned in the military to the corporate leadership (aka managerial) style, which takes a more scientific approach. I have seen many styles come and go, and there is certainly no shortage of articles and trends published on a daily basis. Those of us who have been through the drill enough times usually know what works and what doesn’t; in the words of Kenny Rogers, we know when to hold them and when to fold them.

We tend to focus on the results we have achieved in the past in a given scenario, learning from our mistakes and making sure we highlight successful efforts. In my observation, we tend to do the same thing when implementing frameworks, whether ISO, NIST, COBIT, FAIR, ITIL, CERT-RMM, the Diamond Model, or OCTAVE. You name it, there is a framework for it. Some people pluck the goodness from multiple frameworks and create their own; others kneel at the altar of their chosen framework and swear allegiance to it for all time.

Leadership and management styles or skills can be viewed in much the same manner. There is always an interesting conversation when you ask someone about the difference between leadership and management, leading and directing, or mentorship and oversight. The most glaring difference, however, is that one treats “leadership” as more of a social mechanism and “management” as more of a set of tools for your toolbox.

James Altucher published an article on the 10 things he thinks you should know to become a great leader, and one section in particular caught my eye. Specifically, he states:

> Below 30 people, an organization is a tribe. 70,000 years ago, if a tribe got bigger than 30 people there’s evidence it would split into two tribes. A tribe is like a family. With a family you learn personally who to trust and who not to trust. You learn to care for their individual problems. You know everything about the people in your tribe. At 30 people, a leader spends time with each person in the tribe and knows how to listen to their issues. From 30-150 people you might not know everyone. But you know OF everyone. You know you can trust Jill because Jack tells you can trust Jill and you trust Jack. After 150 people you can’t keep track of everyone. It’s impossible. But this is where humans split off from every other species.
>
> We united with each other by telling stories. We told stories of nationalism, religion, sports, money, products, better, great, BEST! If two people believe in the same story they might be thousands of miles apart and total strangers but they still have a sense they can trust each other. A LEADER TELLS A VISIONARY STORY. We are delivering the best service because…. We are helping people in unique ways because…. We have the best designs because…. We treat people better because…. A good story, like any story ever told, starts with a problem, goes through the painful process of solving the problem, and has a solution that is better than anything ever seen before. First you listened to people, then you took care of people, but now you unite people under a vision they believe in and trust and bond with.

How does this relate to the CISO role or anything else for that matter?

In my humble opinion, where you fall on this topic will decide whether you build and/or operate a successful cybersecurity program. Over the years I have built and run multiple teams performing all kinds of functions, not just in the technology space but also in the military and in emergency response; heck, I even ran a kitchen staff when I was in high school. Success or failure, it always felt “right.”

Here’s why. In the terms Mr. Altucher defines so well, I have a tribal leadership style. Thinking back as I write this, I realize I have set up my cybersecurity programs, past and present, in a tribal manner; I just never defined it that way until now. In business terminology, each time I walked through the door of a new organization, I assessed the landscape of cybersecurity products, services, programs, and projects, and usually reorganized employees and operations to be collaborative, efficient, and effective. Viewed another way, however, I was also organizing the cybersecurity program into multiple tribes.

These tribes sat together, collaborated, gave and received advice, and supported one another’s decisions. They received mentorship as well as a vision of what mission success should look like for the tribe. I back up my tribes and they back me up, always seeking out the facts and making sure everyone is covered.

Those of you with a military, police, or fire background can certainly relate to what I am talking about. When you think about this concept and observe your own corporate culture, are you tribal? Are the functional teams supporting one another, giving and receiving advice, and collaborating freely? Are you backing your tribes up, and are they backing you up?

If not, here are some advisory tidbits I would recommend:

- View your leadership style through a social lens. Treat your management style as tools for your toolbox. Do not treat your tribes as tools.

- Do you differentiate between programs and projects? Programs have outcomes; projects have outputs. I lead my tribes as a program and want a successful outcome. Therefore, my tribes don’t have milestones or deadlines; they have only mission success or failure.

- Keep your tribes small and focused. I commonly use the term “high speed and low drag.” This supports organizational resilience: when you’re breached and need to pivot, small, focused tribes are the optimal structure. Empire building does not equal success.

- Do not build your tribes solely around a standard or framework. If you focus solely on industry standards or cybersecurity frameworks, you will fail. Build your tribes around outcomes and whatever mission success means in your organization. Do not try to build a tribe into columns, rows, and cells.

- Be willing to change. If you are at your workspace as you read this and, surveying the landscape around you, it feels like a scene from the movie Office Space, take a few minutes to reflect on that and think about some ways to change it.

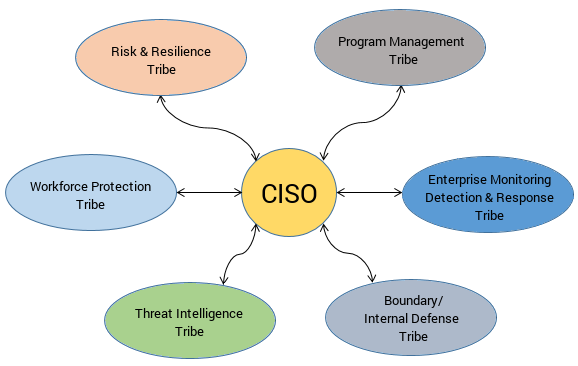

- Observe the below simple diagram:

- It is not a top down org chart; it is a tribal “system.”

- Each tribe would have its own products and services they would be responsible for as well as the mission goals and outcomes.

- From an operations standpoint you are leading an ecosystem with an environment that changes every day, hundreds of times a day. Define what “normal” looks like and observe and react when something “abnormal” occurs.